I’ve been getting into drawing dungeons on isomorphic grids. It’s fun but I was a little frustrated with the process of sourcing and printing out graph paper with an isomorphic grid on it. You have two bad options, basically:

- Download a low-res JPG you found on Google Image search where the grid sizes are wonky and it has a giant URL on it. I needed something clean and simple.

- Buy grid paper on Amazon in bulk. Now I’m thinking about centimeters vs. inches, cost, color, paper size, style, and quantity before I draw a single dungeon. I needed something ad hoc and with less commitment.

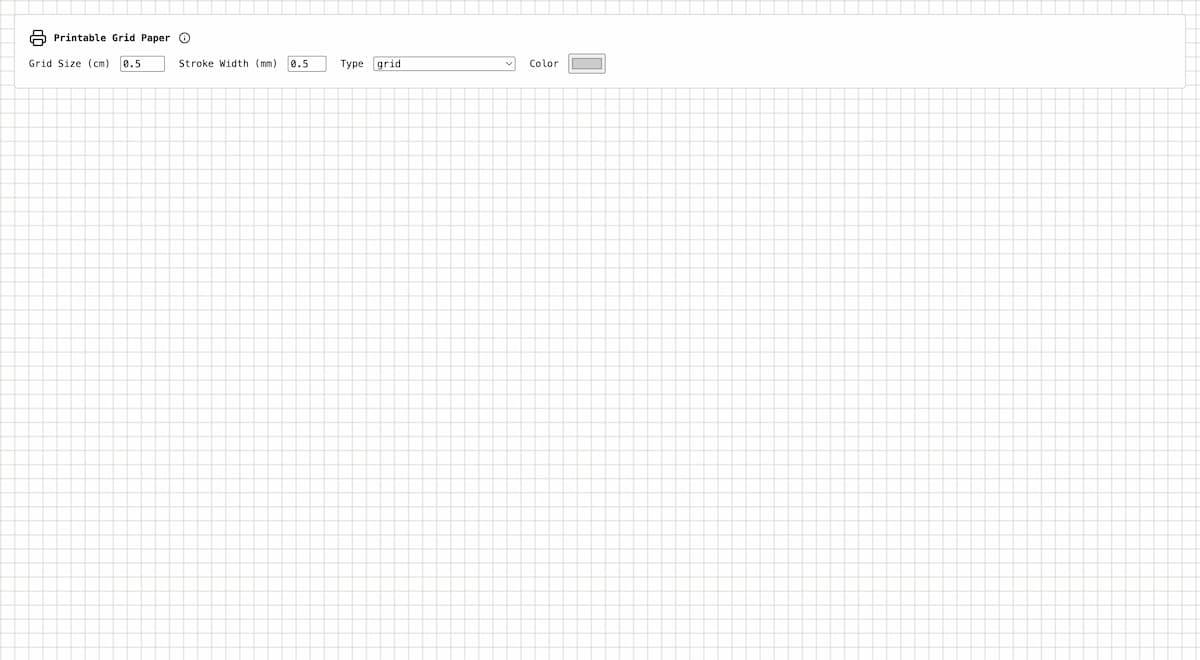

After 15 minutes of getting frustrated I said “I can build this.” And so I did using HTML, CSS, and the tiniest bit of JavaScript. And because it’s a webpage… why limit myself to one kind of grid? I’m able to support ~7 grids types using different kinds of background gradients:

- Grids

- Dot Grids

- Isomorphic Grids

- Isomorphic Dot Grids

- Dual Hex Grids

- Perspective Grids

- Two-Point Perspective Grids

Feeling good about this little tool. The quality can be a bit blurry but background gradients and printers are weird. It’d be nice to make it crisper and I might put some effort at the task (use SVG patterns?)… but at less than 5kb and a couple of nights worth of work, I’m happy with the result. It “made the rounds” as they say on the socials and seems to be a common issue other people experience, so I’ll call that a success.